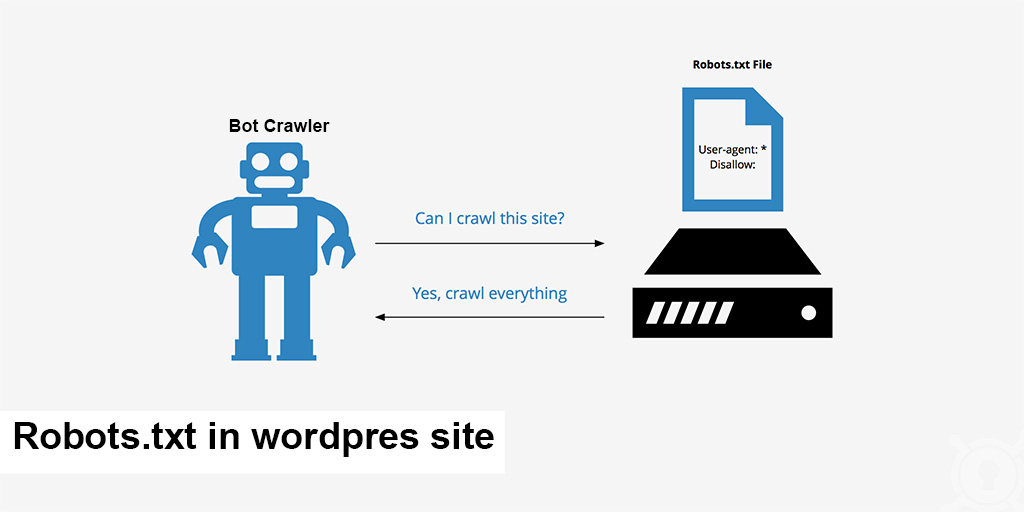

The robots.txt file is an essential tool for managing how web crawlers interact with your site. Located at the root of your domain, this simple text file instructs search engine robots on which files and folders to avoid.

What is the robots.txt File?

Web crawlers, like Googlebot, are programs that visit your site and follow its links to learn about your pages. They typically check the robots.txt file before crawling your site to see if they have permission and if there are specific areas they should not access.

The robots.txt file should be placed in the top-level directory of your domain, such as example.com/robots.txt. To edit it, log in to your web host via a free FTP client like FileZilla and use a text editor such as Notepad (Windows) or TextEdit (Mac). If you’re unsure how to log in via FTP, contact your web hosting company for assistance. Alternatively, some plugins, like Yoast SEO, allow you to edit the robots.txt file directly from your WordPress dashboard.
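Because the file always sits at the root of the domain, its address can be worked out from any page URL on the site. Here is a minimal Python sketch using only the standard library; the function name `robots_txt_url` is made up for this example.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt address for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep only the scheme and host; drop the path, query string and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://example.com/blog/post?id=7"))
```

Opening the printed address in a browser is a quick way to see what bots currently receive.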

How to Disallow All Bots

If you want to prevent all bots from crawling your site, add the following code to your robots.txt file:

User-agent: *

Disallow: /

The User-agent: * directive applies to all robots, and Disallow: / blocks access to your entire website. However, be cautious with this setting on a live site, as it can remove your site from search engines, causing a loss of traffic and revenue.
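If you want to confirm what these two lines do before uploading them, Python's standard `urllib.robotparser` module can evaluate them locally. This is only a sketch: "ExampleBot" is a made-up crawler name, and real search engines may interpret edge cases differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# The two rules from above, kept on consecutive lines because this parser
# treats a blank line as the end of a group.
BLOCK_ALL = """\
User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(BLOCK_ALL.splitlines())

# "ExampleBot" is a hypothetical crawler name used only for this check.
for path in ("/", "/blog/post.html", "/images/logo.png"):
    print(path, parser.can_fetch("ExampleBot", path))
```

Every path should be reported as not fetchable, which is exactly why this setting is risky on a live site.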

How to Allow All Bots

By default, robots.txt works by excluding specific files and folders. If you want all bots to crawl your entire site, you can leave the file empty or not have a robots.txt file at all. Alternatively, use this code to explicitly allow all:

User-agent: *

Disallow:

This indicates that nothing is disallowed, so all areas are accessible.
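An empty file and the explicit allow-all rules should behave the same way. The sketch below compares the two with Python's standard `urllib.robotparser`; the helper `load` and the crawler name "ExampleBot" are assumptions made for this example.

```python
from urllib.robotparser import RobotFileParser


def load(rules: str) -> RobotFileParser:
    """Parse robots.txt text held in a string (hypothetical helper)."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser


empty_file = load("")
explicit_allow = load("User-agent: *\nDisallow:\n")

# Both variants should allow the same made-up crawler on any path.
for path in ("/", "/private/report.pdf"):
    print(path,
          empty_file.can_fetch("ExampleBot", path),
          explicit_allow.can_fetch("ExampleBot", path))
```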

How to Disallow Specific Files and Folders

To block specific files and folders, add a separate Disallow: line for each one. For example:

User-agent: *

Disallow: /topsy/

Disallow: /crets/

Disallow: /hidden/file.html

In this case, all bots can access everything except the two subfolders and the single file listed.
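Each Disallow: value is matched against the start of the requested path, so the trailing slash matters. The following sketch checks a few paths against the rules above with Python's standard `urllib.robotparser`; the crawler name and the test paths are invented for illustration.

```python
from urllib.robotparser import RobotFileParser

RULES = """\
User-agent: *
Disallow: /topsy/
Disallow: /crets/
Disallow: /hidden/file.html
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())


def allowed(path: str) -> bool:
    # "ExampleBot" is a hypothetical crawler name.
    return parser.can_fetch("ExampleBot", path)


print(allowed("/topsy/page.html"))    # inside a blocked folder
print(allowed("/hidden/file.html"))   # the single blocked file
print(allowed("/hidden/other.html"))  # same folder, different file
print(allowed("/topsy-turvy/"))       # similar name, different prefix
```

Only the first two paths are blocked. The other two do not start with any of the listed values, so they stay crawlable.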

How to Disallow Specific Bots

To block a specific bot, use its user-agent name:

User-agent: Bingbot

Disallow: /

User-agent: *

Disallow:

This example blocks Bing’s search engine bot while allowing other bots to crawl everything. You can use the same approach for other bots like Googlebot.
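To see how the two groups apply to different crawlers, the same rules can be evaluated per user agent. This is a sketch with Python's standard `urllib.robotparser`; the path `/products/` is an arbitrary example.

```python
from urllib.robotparser import RobotFileParser

# One group per bot; a blank line separates the groups.
RULES = """\
User-agent: Bingbot
Disallow: /

User-agent: *
Disallow:
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

# The named group applies to Bingbot; every other bot falls back to "*".
for agent in ("Bingbot", "Googlebot"):
    print(agent, parser.can_fetch(agent, "/products/"))
```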

A Good robots.txt File for WordPress

Here’s a sample robots.txt file for WordPress:

User-agent: *

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

Sitemap: https://huypham.work/sitemap.xml

This configuration blocks all bots from accessing the /wp-admin/ folder except for the admin-ajax.php file. Including the Sitemap: directive helps bots find your XML sitemap, which lists all your site’s pages.
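The exception works because, under the Robots Exclusion Protocol (RFC 9309), which Google follows, the most specific matching rule wins: the longer Allow path beats the shorter Disallow path. The sketch below illustrates that longest-match idea only. `is_allowed` is a hand-written helper, not a library API, and it ignores wildcards such as * and $.

```python
def is_allowed(path: str, rules: list[tuple[str, str]]) -> bool:
    """Apply longest-match precedence to (directive, prefix) pairs."""
    best_len = -1
    allowed = True  # a path that matches no rule may be crawled
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue
        is_allow = directive.lower() == "allow"
        # The longer prefix wins; on a tie, Allow wins.
        if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
            best_len = len(prefix)
            allowed = is_allow
    return allowed


# The two path rules from the WordPress sample above.
WORDPRESS_RULES = [
    ("Disallow", "/wp-admin/"),
    ("Allow", "/wp-admin/admin-ajax.php"),
]

print(is_allowed("/wp-admin/admin-ajax.php", WORDPRESS_RULES))
print(is_allowed("/wp-admin/options.php", WORDPRESS_RULES))
print(is_allowed("/blog/hello-world/", WORDPRESS_RULES))
```

Not every crawler resolves conflicts this way, so treat the result as a guide to well-behaved bots rather than a guarantee.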

When to Use Noindex Instead of robots.txt

If you want to keep specific pages out of search engine results, robots.txt isn’t the best method. Search engines can still index a URL blocked by robots.txt if other pages link to it, though the listing won’t show useful metadata. Instead, use the noindex tag to block indexing, and leave the page crawlable so that bots can actually read the tag. In WordPress, go to Settings -> Reading and check “Discourage search engines from indexing this site” to add a noindex tag to all your pages:

<meta name='robots' content='noindex,follow' />

You can also use SEO plugins like Yoast or The SEO Framework to apply noindex to specific posts, pages, or categories.
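To check whether a page really carries the tag, you can scan its HTML for a robots meta element. The sketch below uses Python's standard `html.parser`; the class `RobotsMetaFinder` and the function `has_noindex` are names invented for this example, and the HTML strings stand in for a page you would normally download first.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            # Split "noindex,follow" into individual lowercase directives.
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def has_noindex(html: str) -> bool:
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives


print(has_noindex("<head><meta name='robots' content='noindex,follow' /></head>"))
print(has_noindex("<head><title>Public page</title></head>"))
```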

When to Block Your Entire Site

To block your entire site from both bots and people, use a password-protection plugin like Password Protected. This is more secure than relying solely on robots.txt.

Important Facts About robots.txt

Keep in mind that not all robots will follow the instructions in your robots.txt file, especially malicious bots. Additionally, anyone can view your robots.txt file to see what you’re trying to hide. To ensure your robots.txt file is working correctly, use Google Search Console for testing.

Conclusion

The robots.txt file is a powerful tool for managing how bots interact with your site. It can be helpful for blocking certain areas or preventing specific bots from crawling your site. However, use it with caution, as mistakes can have significant consequences, like blocking all robots and losing search traffic. Always double-check your settings to avoid unintended issues.